1. Quantum Computation

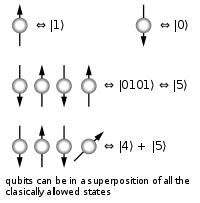

A quantum computer (also known as a quantum supercomputer) is a computation device that makes direct use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data. Quantum computers are different from digital computers based on transistors. Whereas digital computers require data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses qubits (quantum bits), which can be in superpositions of states. A theoretical model is the quantum Turing machine, also known as the universal quantum computer. Quantum computers share theoretical similarities with non-deterministic and probabilistic computers; one example is the ability to be in more than one state simultaneously. The field of quantum computing was first introduced by Yuri Manin in 1980 and Richard Feynman in 1982. A quantum computer with spins as quantum bits was also formulated for use as a quantum space–time in 1969.

As of 2014 quantum computing is still in its infancy but experiments have been carried out in which quantum computational operations were executed on a very small number of qubits. Both practical and theoretical research continues, and many national governments and military funding agencies support quantum computing research to develop quantum computers for both civilian and national security purposes, such as cryptanalysis.

Large-scale quantum computers will be able to solve certain problems much more quickly than any classical computer using the best currently known algorithms, like integer factorization using Shor's algorithm or the simulation of quantum many-body systems. There existquantum algorithms, such as Simon's algorithm, which run faster than any possible probabilistic classical algorithm.Given sufficient computational resources, however, a classical computer could be made to simulate any quantum algorithm; quantum computation does not violate the Church–Turing thesis.

2. Entanglement

Quantum entanglement is a physical phenomenon that occurs when pairs or groups of particles are generated or interact in ways such that the quantum state of each particle cannot be described independently – instead, a quantum state may be given for the system as a whole.

Measurements of physical properties such as position, momentum, spin, polarization, etc. performed on entangled particles are found to be appropriately correlated. For example, if a pair of particles is generated in such a way that their total spin is known to be zero, and one particle is found to have clockwise spin on a certain axis, then the spin of the other particle, measured on the same axis, will be found to be counterclockwise. Because of the nature of quantum measurement, however, this behavior gives rise to effects that can appear paradoxical: any measurement of a property of a particle can be seen as acting on that particle (e.g. by collapsing a number of superimposed states); and in the case of entangled particles, such action must be on the entangled system as a whole. It thus appears that one particle of an entangled pair "knows" what measurement has been performed on the other, and with what outcome, even though there is no known means for such information to be communicated between the particles, which at the time of measurement may be separated by arbitrarily large distances.

Such phenomena were the subject of a 1935 paper by Albert Einstein, Boris Podolsky and Nathan Rosen, describing what came to be known as the EPR paradox, and several papers by Erwin Schrödinger shortly thereafter. Einstein and others considered such behavior to be impossible, as it violated the local realist view of causality (Einstein referred to it as "spooky action at a distance"), and argued that the accepted formulation of quantum mechanics must therefore be incomplete. Later, however, the counterintuitive predictions of quantum mechanics were verified experimentally. Experiments have been performed involving measuring the polarization or spin of entangled particles in different directions, which – by producing violations of Bell's inequality – demonstrate statistically that the local realist view cannot be correct. This has been shown to occur even when the measurements are performed more quickly than light could travel between the sites of measurement: there is no lightspeed or slower influence that can pass between the entangled particles. Recent experiments have measured entangled particles within less than one part in 10,000 of the light travel time between them. According to the formalism of quantum theory, the effect of measurement happens instantly. It is not possible, however, to use this effect to transmit classical information at faster-than-light speeds (see Faster-than-light → Quantum mechanics).

Quantum entanglement is an area of extremely active research by the physics community, and its effects have been demonstrated experimentally with photons, electrons, molecules the size of buckyballs, and even small diamonds. Research is also focused on the utilization of entanglement effects in communication and computation.

3. Qubit Data

A quantum computer with a given number of qubits is fundamentally different from a classical computer composed of the same number of classical bits. For example, to represent the state of an n-qubit system on a classical computer would require the storage of 2n complex coefficients. Although this fact may seem to indicate that qubits can hold exponentially more information than their classical counterparts, care must be taken not to overlook the fact that the qubits are only in a probabilistic superposition of all of their states. This means that when the final state of the qubits is measured, they will only be found in one of the possible configurations they were in before measurement. Moreover, it is incorrect to think of the qubits as only being in one particular state before measurement since the fact that they were in a superposition of states before the measurement was made directly affects the possible outcomes of the computation.

For example: Consider first a classical computer that operates on a three-bit register. The state of the computer at any time is a probability distribution over the  different three-bit strings 000, 001, 010, 011, 100, 101, 110, 111. If it is a deterministic computer, then it is in exactly one of these states with probability 1. However, if it is a probabilistic computer, then there is a possibility of it being in any one of a number of different states. We can describe this probabilistic state by eight nonnegative numbers A,B,C,D,E,F,G,H (where A = probability computer is in state 000, B = probability computer is in state 001, etc.). There is a restriction that these probabilities sum to 1.

different three-bit strings 000, 001, 010, 011, 100, 101, 110, 111. If it is a deterministic computer, then it is in exactly one of these states with probability 1. However, if it is a probabilistic computer, then there is a possibility of it being in any one of a number of different states. We can describe this probabilistic state by eight nonnegative numbers A,B,C,D,E,F,G,H (where A = probability computer is in state 000, B = probability computer is in state 001, etc.). There is a restriction that these probabilities sum to 1.

different three-bit strings 000, 001, 010, 011, 100, 101, 110, 111. If it is a deterministic computer, then it is in exactly one of these states with probability 1. However, if it is a probabilistic computer, then there is a possibility of it being in any one of a number of different states. We can describe this probabilistic state by eight nonnegative numbers A,B,C,D,E,F,G,H (where A = probability computer is in state 000, B = probability computer is in state 001, etc.). There is a restriction that these probabilities sum to 1.

different three-bit strings 000, 001, 010, 011, 100, 101, 110, 111. If it is a deterministic computer, then it is in exactly one of these states with probability 1. However, if it is a probabilistic computer, then there is a possibility of it being in any one of a number of different states. We can describe this probabilistic state by eight nonnegative numbers A,B,C,D,E,F,G,H (where A = probability computer is in state 000, B = probability computer is in state 001, etc.). There is a restriction that these probabilities sum to 1.

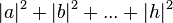

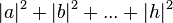

The state of a three-qubit quantum computer is similarly described by an eight-dimensional vector (a,b,c,d,e,f,g,h), called a ket. Here, however, the coefficients can have complex values, and it is the sum of the squares of the coefficients' magnitudes,  , that must equal 1. These square magnitudes represent the probability amplitudes of given states. However, because a complex number encodes not just a magnitude but also a direction in the complex plane, the phase difference between any two coefficients (states) represents a meaningful parameter. This is a fundamental difference between quantum computing and probabilistic classical computing.[11]

, that must equal 1. These square magnitudes represent the probability amplitudes of given states. However, because a complex number encodes not just a magnitude but also a direction in the complex plane, the phase difference between any two coefficients (states) represents a meaningful parameter. This is a fundamental difference between quantum computing and probabilistic classical computing.[11]

, that must equal 1. These square magnitudes represent the probability amplitudes of given states. However, because a complex number encodes not just a magnitude but also a direction in the complex plane, the phase difference between any two coefficients (states) represents a meaningful parameter. This is a fundamental difference between quantum computing and probabilistic classical computing.[11]

, that must equal 1. These square magnitudes represent the probability amplitudes of given states. However, because a complex number encodes not just a magnitude but also a direction in the complex plane, the phase difference between any two coefficients (states) represents a meaningful parameter. This is a fundamental difference between quantum computing and probabilistic classical computing.[11]

If you measure the three qubits, you will observe a three-bit string. The probability of measuring a given string is the squared magnitude of that string's coefficient (i.e., the probability of measuring 000 =  , the probability of measuring 001 =

, the probability of measuring 001 =  , etc..). Thus, measuring a quantum state described by complex coefficients (a,b,...,h) gives the classical probability distribution

, etc..). Thus, measuring a quantum state described by complex coefficients (a,b,...,h) gives the classical probability distribution  and we say that the quantum state "collapses" to a classical state as a result of making the measurement.

and we say that the quantum state "collapses" to a classical state as a result of making the measurement.

, the probability of measuring 001 =

, the probability of measuring 001 =  , etc..). Thus, measuring a quantum state described by complex coefficients (a,b,...,h) gives the classical probability distribution

, etc..). Thus, measuring a quantum state described by complex coefficients (a,b,...,h) gives the classical probability distribution  and we say that the quantum state "collapses" to a classical state as a result of making the measurement.

and we say that the quantum state "collapses" to a classical state as a result of making the measurement.

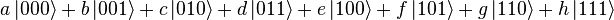

Note that an eight-dimensional vector can be specified in many different ways depending on what basis is chosen for the space. The basis of bit strings (e.g., 000, 001, ..., 111) is known as the computational basis. Other possible bases are unit-length, orthogonal vectors and the eigenvectors of the Pauli-x operator. Ket notation is often used to make the choice of basis explicit. For example, the state (a,b,c,d,e,f,g,h) in the computational basis can be written as:

- where, e.g.,

The computational basis for a single qubit (two dimensions) is  and

and  .

.

and

and  .

.

Using the eigenvectors of the Pauli-x operator, a single qubit is  and

and  .

.

and

and  .

.Operation

While a classical three-bit state and a quantum three-qubit state are both eight-dimensional vectors, they are manipulated quite differently for classical or quantum computation. For computing in either case, the system must be initialized, for example into the all-zeros string,  , corresponding to the vector (1,0,0,0,0,0,0,0). In classical randomized computation, the system evolves according to the application of stochastic matrices, which preserve that the probabilities add up to one (i.e., preserve the L1 norm). In quantum computation, on the other hand, allowed operations are unitary matrices, which are effectively rotations (they preserve that the sum of the squares add up to one, the Euclidean or L2 norm). (Exactly what unitaries can be applied depend on the physics of the quantum device.) Consequently, since rotations can be undone by rotating backward, quantum computations are reversible. (Technically, quantum operations can be probabilistic combinations of unitaries, so quantum computation really does generalize classical computation. See quantum circuit for a more precise formulation.)

, corresponding to the vector (1,0,0,0,0,0,0,0). In classical randomized computation, the system evolves according to the application of stochastic matrices, which preserve that the probabilities add up to one (i.e., preserve the L1 norm). In quantum computation, on the other hand, allowed operations are unitary matrices, which are effectively rotations (they preserve that the sum of the squares add up to one, the Euclidean or L2 norm). (Exactly what unitaries can be applied depend on the physics of the quantum device.) Consequently, since rotations can be undone by rotating backward, quantum computations are reversible. (Technically, quantum operations can be probabilistic combinations of unitaries, so quantum computation really does generalize classical computation. See quantum circuit for a more precise formulation.)

Finally, upon termination of the algorithm, the result needs to be read off. In the case of a classical computer, we sample from the probability distribution on the three-bit register to obtain one definite three-bit string, say 000. Quantum mechanically, we measure the three-qubit state, which is equivalent to collapsing the quantum state down to a classical distribution (with the coefficients in the classical state being the squared magnitudes of the coefficients for the quantum state, as described above), followed by sampling from that distribution. Note that this destroys the original quantum state. Many algorithms will only give the correct answer with a certain probability. However, by repeatedly initializing, running and measuring the quantum computer, the probability of getting the correct answer can be increased.

For more details on the sequences of operations used for various quantum algorithms, see universal quantum computer, Shor's algorithm, Grover's algorithm, Deutsch-Jozsa algorithm, amplitude amplification, quantum Fourier transform, quantum gate, quantum adiabatic algorithm and quantum error correction.

4. Quantum Gates

In quantum computing and specifically the quantum circuit model of computation, a quantum gate (or quantum logic gate) is a basic quantum circuit operating on a small number of qubits. They are the building blocks of quantum circuits, like classical logic gates are for conventional digital circuits.

Unlike many classical logic gates, quantum logic gates are reversible. However, classical computing can be performed using only reversible gates. For example, the reversibleToffoli gate can implement all Boolean functions. This gate has a direct quantum equivalent, showing that quantum circuits can perform all operations performed by classical circuits.

Quantum logic gates are represented by unitary matrices. The most common quantum gates operate on spaces of one or two qubits, just like the common classical logic gates operate on one or two bits. This means that as matrices, quantum gates can be described by 2 × 2 or 4 × 4 unitary matrices.

5. Shor's Algorithm

Shor's algorithm, named after mathematician Peter Shor, is a quantum algorithm (an algorithm that runs on a quantum computer) for integer factorization formulated in 1994. Informally it solves the following problem: Given an integer N, find its prime factors.

On a quantum computer, to factor an integer N, Shor's algorithm runs in polynomial time (the time taken is polynomial in log N, which is the size of the input). Specifically it takes time O((log N)3), demonstrating that the integer factorization problem can be efficiently solved on a quantum computer and is thus in the complexity class BQP. This is substantially faster than the most efficient known classical factoring algorithm, the general number field sieve, which works in sub-exponential time — aboutO(e1.9 (log N)1/3 (log log N)2/3). The efficiency of Shor's algorithm is due to the efficiency of the quantum Fourier transform, and modular exponentiation by repeated squarings.

If a quantum computer with a sufficient number of qubits could operate without succumbing to noise and other quantum decoherence phenomena, Shor's algorithm could be used to break public-key cryptography schemes such as the widely used RSA scheme. RSA is based on the assumption that factoring large numbers is computationally infeasible. So far as is known, this assumption is valid for classical (non-quantum) computers; no classical algorithm is known that can factor in polynomial time. However, Shor's algorithm shows that factoring is efficient on an ideal quantum computer, so it may be feasible to defeat RSA by constructing a large quantum computer. It was also a powerful motivator for the design and construction of quantum computers and for the study of new quantum computer algorithms. It has also facilitated research on new cryptosystems that are secure from quantum computers, collectively called post-quantum cryptography.

In 2001, Shor's algorithm was demonstrated by a group at IBM, who factored 15 into 3 × 5, using an NMR implementation of a quantum computer with 7 qubits. However, some doubts have been raised as to whether IBM's experiment was a true demonstration of quantum computation, since no entanglement was observed. Since IBM's implementation, several other groups have implemented Shor's algorithm using photonic qubits, emphasizing that entanglement was observed. In 2012, the factorization of 15 was repeated. Also in 2012, the factorization of 21 was achieved, setting the record for the largest number factored with a quantum computer.In April 2012, the factorization of 143 was achieved, although this used adiabatic quantum computation rather than Shor's algorithm

references :

http://en.wikipedia.org/wiki/Quantum_entanglementhttp://en.wikipedia.org/wiki/Quantum_gate

http://en.wikipedia.org/wiki/Quantum_computer

http://en.wikipedia.org/wiki/Shor's_algorithm